Privacy and data governance

Trustworthy AI must ensure that privacy, as a fundamental right, is respected for all parties: users, targeted individuals, and employees. The quality and integrity of the data must also be protected, and access to data must be regulated.

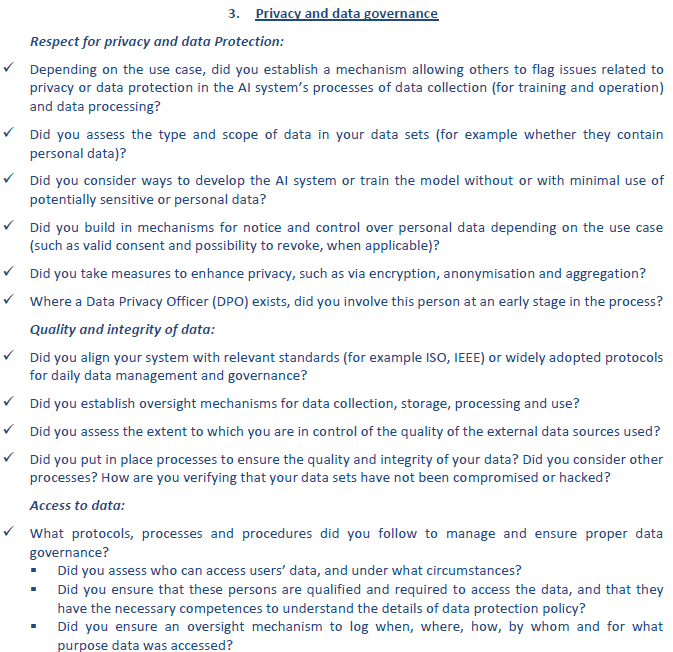

Respect for privacy and data protection

Any system that gathers data must respect the user's consent and protect their privacy.

Concretely, this means setting up the following lawful and secure process whenever a new business objective involves personally identifiable information (PII) or personal health information (PHI):

| Step | Description & action |
|---|---|
| Business objective | What is the end business goal of the analytical initiative? This step conditions everything else as a strategy is derived to design the relevant AI system to answer the business challenge. |
| Pre-work: data classification | Document the kind of data that needs to be collected: does it include PII or PHI or both? |
| Pre-work: minimization principle | |
| Legal base of the business objective | Specify the legal framework to which the use case is bound: |
| Data handling policy | Document & implement security measures: |
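The data classification pre-work in the table above can be kept in a machine-readable form. The following is a minimal sketch, not a prescribed method: the `Sensitivity` enum, the `COLUMN_TAGS` mapping, the column names, and the `classify_dataset` helper are all hypothetical names introduced for illustration.

```python
from enum import Enum


class Sensitivity(Enum):
    NONE = "none"
    PII = "pii"
    PHI = "phi"


# Hypothetical column tags; a real project would maintain these with the data owner.
COLUMN_TAGS = {
    "email": Sensitivity.PII,
    "full_name": Sensitivity.PII,
    "diagnosis_code": Sensitivity.PHI,
    "purchase_amount": Sensitivity.NONE,
}


def classify_dataset(columns):
    """Return the set of sensitive categories present in the given columns.

    Unknown columns raise an error so that nothing is collected unclassified.
    """
    found = set()
    for name in columns:
        if name not in COLUMN_TAGS:
            raise KeyError(f"column {name!r} has not been classified yet")
        tag = COLUMN_TAGS[name]
        if tag is not Sensitivity.NONE:
            found.add(tag)
    return found
```

Calling `classify_dataset(["email", "diagnosis_code"])` would report both PII and PHI, which answers the "PII or PHI or both?" question before any data is collected.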

Quality and integrity of data

To avoid harmful consequences from an AI system, the quality and integrity of the data must be ensured at all times.

Relevant standards and protocols for data management must be put in place.

Data owners, responsible for data governance within their functional scope, must be appointed.

Data quality must be controlled, including for data from external sources. Incorrect personal data could harm the person concerned. For instance, duplicates and wrongly formatted values should be avoided. The Great Expectations library can help you implement these quality checks.
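To illustrate the two checks mentioned above (duplicates and wrong formats), here is a minimal sketch in plain Python. It deliberately does not use the Great Expectations API. The record layout, the `customer_id` and `email` fields, and the simplified email pattern are assumptions made for the example.

```python
import re
from collections import Counter

# Deliberately simple pattern for illustration; not a full email validator.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def find_duplicates(records, key):
    """Return the key values that appear in more than one record."""
    counts = Counter(record[key] for record in records)
    return sorted(value for value, n in counts.items() if n > 1)


def find_bad_emails(records, field="email"):
    """Return the indices of records whose email field does not match the pattern."""
    return [
        i for i, record in enumerate(records)
        if not EMAIL_PATTERN.match(str(record.get(field, "")))
    ]


# Made-up sample data: one duplicated id and one malformed email.
records = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 2, "email": "not-an-email"},
    {"customer_id": 1, "email": "ana@example.com"},
]

duplicate_ids = find_duplicates(records, "customer_id")
bad_email_rows = find_bad_emails(records)
```

A dedicated data quality library would typically add reporting and scheduling on top of checks like these.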

Protection against hacking must be implemented, along with verification that the data has not been compromised. Data must be stored in a protected location.
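One common way to verify that stored data has not been altered is to record a cryptographic digest when the data is written and compare it when the data is read. The sketch below uses Python's standard `hashlib` and `hmac` modules; the function names and sample bytes are illustrative, and it assumes the expected digest is kept somewhere separate from the data itself.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, expected_digest: str) -> bool:
    """Compare the current digest with the one recorded at write time."""
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(sha256_digest(data), expected_digest)


# Made-up sample content standing in for a stored file.
original = b"customer_id,email\n1,ana@example.com\n"
stored_digest = sha256_digest(original)

# Simulate a modification of the stored content.
tampered = original.replace(b"ana@", b"eve@")
```

Here `is_unmodified(original, stored_digest)` holds while `is_unmodified(tampered, stored_digest)` does not. This only helps if the recorded digest itself cannot be changed by whoever alters the data.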

Access to data

As a general principle, only people with a valid reason should have access to a given dataset.

AI contributors must make sure they obtain security clearance from their legal department before starting to collect data.

Each dataset containing private or sensitive data should be protected individually, with access rights granted on a case-by-case basis.

Anyone requesting personal or sensitive data should have the agreement of the Data Protection Officer (DPO), if one exists, or justify the need. They must also follow the data protection policy in place.

If possible, an oversight mechanism should be put in place to log when, where, how, and by whom each piece of data was accessed.
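The per-dataset access rights and the oversight log described above could look like the following minimal sketch. It is an illustration only: the `AccessEvent` fields, the `ALLOWED_USERS` mapping, the dataset and user names, and the `request_access` helper are hypothetical, and a real deployment would rely on the access control and audit features of its storage platform.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AccessEvent:
    when: datetime   # when the access was attempted (UTC)
    who: str         # user requesting access
    dataset: str     # which dataset was requested
    where: str       # origin of the request, e.g. a host name
    how: str         # kind of access, e.g. "read" or "export"
    granted: bool    # whether access was allowed


# Hypothetical per-dataset access rights, granted case by case.
ALLOWED_USERS = {"patients_2023": {"alice"}}

# In-memory log for the example; a real log would be append-only and persistent.
access_log = []


def request_access(user, dataset, where, how="read"):
    """Check per-dataset rights and log the attempt, whether granted or not."""
    granted = user in ALLOWED_USERS.get(dataset, set())
    access_log.append(
        AccessEvent(datetime.now(timezone.utc), user, dataset, where, how, granted)
    )
    return granted
```

Logging denied attempts as well as granted ones is a design choice here: it lets a reviewer see who tried to reach a dataset without a valid reason.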

Definitions

Encryption: the process of converting information or data into a code, especially to prevent unauthorized access.

Anonymization: the process of protecting private or sensitive information by erasing or encrypting the identifiers that connect an individual to stored data.

Aggregation: the process of combining several data entries before they are ingested into a system, to avoid using and accessing specific personal data.
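To make the last two definitions more concrete, here is a minimal sketch using only the Python standard library. Note that replacing an identifier with a keyed hash, as done here, is usually called pseudonymization, since whoever holds the key can still link records to a person. The key, field names, and sample rows are made up for the example.

```python
import hashlib
import hmac
from collections import defaultdict

# Assumption: in practice the key would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(identifier: str) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(SECRET_KEY, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def aggregate_by(rows, group_field, value_field):
    """Sum value_field per group so that only group totals are passed on."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[group_field]] += row[value_field]
    return dict(totals)


# Made-up sample rows.
rows = [
    {"email": "ana@example.com", "city": "Lyon", "spend": 20.0},
    {"email": "bo@example.com", "city": "Lyon", "spend": 5.0},
    {"email": "cy@example.com", "city": "Nice", "spend": 7.5},
]

# Same rows with the direct identifier replaced by its keyed hash.
pseudonymized = [{**row, "email": pseudonymize(row["email"])} for row in rows]

# Group totals only; no per-person values remain.
spend_by_city = aggregate_by(rows, "city", "spend")
```

Very small groups can still point to an individual, so aggregated output may need a minimum group size before it is shared.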

Appendix - Recommendations from the EU

Below are the recommendations quoted directly from the EU.